This code is attached to a command button on a form. The form has two fields: txtProgress, which displays various messages, and lblStatus, which displays a record count showing the progress.


This article discusses several key recordset operations, particularly those involving updating records. Topics covered include:

For related information about using recordsets, see the following articles:

Important: Your ability to update records requires that you have update permission for your database and that you have an updatable table-type or dynaset-type recordset. You can't update snapshot-type recordsets with the MFC DAO classes.

The whole blogosphere was buzzing with the launch of the Google Chrome browser. However, Google's entry into the browser war was totally unexpected, and it has left a feeling of being ditched, since Google supported the open source Firefox and has now launched its own browser.

Several concerns have been raised about the Google Chrome browser. The biggest concern among Chrome users is the Google Chrome privacy policy. Under this policy, Google gets enough power to collect the smallest details, such as which user is surfing what.

The Google Chrome browser is based on Chromium, which is an open source project. SRWare has used the open source Chromium code to build the Iron browser, a clone of Google Chrome that offers exactly the same features.

The Iron browser has disabled the following tracking features: the client ID (every Google Chrome installation has a unique client ID that can be used to track a user's surfing habits), Google Suggest, the timestamp of installation, the URL tracker, RLZ tracking, the Chrome updater, the alternate error page, and error reporting.

Another good thing about the Iron browser is that it uses the latest version of WebKit, 525.19.

DOWNLOAD Iron Browser, the Google Chrome Clone

DOWNLOAD PORTABLE Iron Browser, the Google Chrome Clone

DOWNLOAD SOURCE CODE for Iron Browser, the Google Chrome Clone

Background Reading (for interest only)

Balter pages 672 - 684

(Access 2000 Balter pages 430 - 468, Jennings Chapter 27, pages 1032 - 1082)

Commentary

Microsoft currently provide two different ways of accessing data (strictly, interface definitions): Data Access Objects (DAO) and ActiveX Data Objects (ADO). DAO was the only model in Visual Basic up to VB5 and in Access up to Access 97. With VB6 and from Access 2000, Microsoft have made both DAO and ADO available. Microsoft have also made it clear that their intention is to move to ADO exclusively in the long term. Certain important products and environments, such as ASP scripting using VBScript, only support ADO, and anyone planning to use Microsoft products long term should learn the use of ADO.

As stated below, a database created in Access 2003 uses DAO by default, as does a database created in Access 97, but Access 2000 uses ADO. So the default in the databases we have used so far, which were converted from Access 97, is DAO.

For this reason, and because it is a bit simpler, the rest of this module will use DAO objects and methods to be consistent with the methods on the default objects created by Access.

If you set up a new database in Access 2003 and then open the VBA IDE (either from a Form or a new Module) and then choose Tools, References from the Toolbar, you will get a window showing the places VBA will look for definitions of language methods etc. What you will see is

The PROJCONT (and other) databases supplied with these notes originate from Access 97 databases and so have the DAO references and will execute the DAO code.

Very Important: If you create a database in Access 2000 and you want to write DAO code in it, you must change the references from the native Access 2000 list to the native Access 97 list as shown above. Otherwise you will get a series of inexplicable and meaningless error messages as Access tries to execute the commands in their ADO form!

If you create a database in Access 2003 and intend to write data access VBA, you should deselect the library for the data access objects you don't intend to use.
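Both libraries define an object called Recordset, which is why the wrong reference list produces such confusing errors. As a minimal sketch (not taken from the course databases), the declarations below name the library explicitly, so each one resolves the same way whichever references are ticked. The table name Customers is an assumption made for illustration. The field names Custno and Customer are borrowed from the form code later in these notes. The ADO routine only compiles if the ActiveX Data Objects library is referenced.

```vba
Option Compare Database
Option Explicit

' Sketch only: "Customers" is an assumed table name, not one from the notes.

' DAO version: the DAO. prefix stops the declaration resolving to ADODB.Recordset.
Public Sub ListCustomersWithDAO()
    Dim db As DAO.Database
    Dim rs As DAO.Recordset

    Set db = CurrentDb()
    Set rs = db.OpenRecordset("Customers", dbOpenDynaset)

    Do While Not rs.EOF
        Debug.Print rs!Custno, rs!Customer
        rs.MoveNext
    Loop

    rs.Close
    Set rs = Nothing
    Set db = Nothing
End Sub

' ADO version of the same loop, using the connection Access already holds.
Public Sub ListCustomersWithADO()
    Dim rs As ADODB.Recordset

    Set rs = New ADODB.Recordset
    rs.Open "SELECT Custno, Customer FROM Customers", _
            CurrentProject.Connection, adOpenForwardOnly, adLockReadOnly

    Do While Not rs.EOF
        Debug.Print rs!Custno, rs!Customer
        rs.MoveNext
    Loop

    rs.Close
    Set rs = Nothing
End Sub
```

An unqualified Dim rs As Recordset takes whichever library comes first in the references list, so qualifying the type is a cheap safeguard even when only one library is selected.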

| | Access 97 can develop | Access 2000 can develop | Access 2003 can develop |
| --- | --- | --- | --- |
| Access 97 format | Yes | Can convert (both ways) but not develop | Can convert (both ways) but not develop |
| Access 2000 format | No | Yes | Yes (can also create) |
| Access 2002-3 format | No | No | Yes |

If you want to match the position of the displayed data with that in the RecordsetClone, you can do this with the Bookmark properties of the two Recordsets by assigning the value of the Bookmark of the clone to Me.Bookmark (implicitly the Bookmark property of the recordset underlying the form).

The following is an extract from the Help entry for RecordsetClone.

Setting

The RecordsetClone property setting is a copy of the underlying query or table specified by the form's RecordSource property. If a form is based on a query, for example, referring to the RecordsetClone property is the equivalent of cloning a Recordset object by using the same query. If you then apply a filter to the form, the Recordset object reflects the filtering.

This property is available only by using Visual Basic and is read-only in all views.

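Option Compare Database
Option Explicit

' Moves the form to the first record, then walks the clone to the end and
' prints every record. The form itself stays on the first record.
Private Sub Command10_Click()
    With Me.RecordsetClone
        If .RecordCount = 0 Then Exit Sub   ' no records: MoveFirst would fail
        .MoveFirst
        Me.Bookmark = .Bookmark
        Do While Not .EOF
            Debug.Print !Custno, !Customer
            .MoveNext
        Loop
    End With
End Sub

' As above, but the form follows the clone: after each MoveNext the form's
' Bookmark is set to the clone's, unless the clone has passed the last record.
Private Sub Command11_Click()
    With Me.RecordsetClone
        If .RecordCount = 0 Then Exit Sub   ' no records: MoveFirst would fail
        .MoveFirst
        Me.Bookmark = .Bookmark
        Do While Not .EOF
            Debug.Print !Custno, !Customer
            .MoveNext
            If Not .EOF Then
                Me.Bookmark = .Bookmark
            End If
        Loop
    End With
End Sub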
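The same Bookmark assignment is often paired with a search on the clone, so that the form only moves when a match is found. The following is a sketch under stated assumptions: the button name cmdFindCustomer and the customer number 42 are made up for illustration, and Custno is assumed to be a numeric field. FindFirst and NoMatch are DAO Recordset members, so this also assumes the form's recordset is a DAO one.

```vba
' Sketch only: cmdFindCustomer and the value 42 are hypothetical.
Private Sub cmdFindCustomer_Click()
    With Me.RecordsetClone
        ' Search the clone so the displayed record does not move during the search.
        .FindFirst "Custno = 42"
        If .NoMatch Then
            MsgBox "No customer with that number was found."
        Else
            ' Line the form up with the record the clone is now positioned on.
            Me.Bookmark = .Bookmark
        End If
    End With
End Sub
```

Searching the clone rather than the form's own recordset means a failed search leaves the user on the record they were already viewing.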

SHAH ALAM, 26 August - Blood spatter on a pair of slippers found in a Suzuki Vitara jeep, together with the discovery of several of Altantuya Shaariibuu's personal belongings, proves that the Mongolian woman was with Chief Inspector Azilah Hadri and Corporal Sirul Azhar Umar before she was killed.

Deputy Public Prosecutor Tun Abdul Majid Tun Hamzah argued in the High Court here today that there was strong evidence to support the circumstantial evidence proving the element of common intention as stated in the charge.

According to him, the mobile phone transaction records, both voice calls and short message service (SMS) messages, clearly show that Azilah and Sirul Azhar were in communication during that critical period.

"Likewise, the usage transactions of the Touch 'n Go card (P119) and the Smart Tag card found in the Suzuki 1300 four-wheel-drive vehicle with registration number CAC 1883 clearly show Sirul's movements.

Source: Utusan

You will be sent a welcome e-mail and an e-mail to activate your account.

Click the link in the activation e-mail to enable your account and allow you to login.

On your subsequent visits please click the sign in button and then enter your email address and password.

5. Click activation link

You will receive an e-mail that asks you to click on the link provided.

6. Registration process is completed.

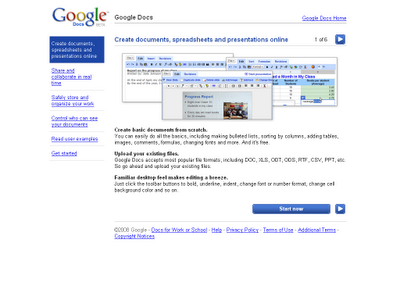

Documents, spreadsheets, and presentations can be created within the application itself, imported through the web interface, or sent via email. They can also be saved to the user's computer in a variety of formats. By default, they are saved to Google's servers. Open documents are automatically saved to prevent data loss, and a full revision history is automatically kept. Documents can be tagged and archived for organizational purposes.

Collaboration between users is also a feature of Google Docs. Documents can be shared, opened, and edited by multiple users at the same time. In the case of spreadsheets, users can be notified of changes to any specified regions via e-mail.

The application supports popular Office file types such as .doc or .xls. It also includes support for the OpenDocument format[2].

It is also possible to upload and share PDF files.

First-time computer buyers used to buy desktops for menial tasks like word processing, checking e-mail and Internet browsing, or managing checkbooks and recipes. It's become much simpler for the consumer to use their new computer as a multimedia machine, managing photos, music, and even movies with a DVD drive.

Dedicated game players will likely want a faster computer with more memory, but most entry-level computers (at entry-level prices) have enough speed, memory and storage to handle all these tasks. Powerful computers are becoming more affordable, and many budget models will let you burn CDs, run sophisticated operating systems and play the latest video games right out of the box.

It's never been easier or less expensive to buy a state-of-the-art personal computer. It wasn't long ago that computer manufacturers were striving to come up with a sub-$1,000 PC. For a while, there were even stripped-down, basic machines on the market for less than $500, though the poor profit margins on these systems have pretty much forced them from the market.

The winner here is, of course, you. Options for the PC buyer abound like never before. Keep in mind that "basic" is a very relative term. Even the most basic PCs these days provide features and performance that are astonishing.

Before you decide anything, decide how much you can spend. After that, take a look at what you want out of your computer -- is this the first home computer for you and your family? Will this be for your college-bound son or daughter for the next four years? Are you anticipating the newest game releases? The tasks you want to accomplish will dictate the specifications you need in your new computer.

Your choices begin with the shape of the computer itself. For a cramped office or living space, small-form-factor (SFF) computers are an easy solution. Their main disadvantage is that a small cabinet has fewer expansion slots, and therefore less room for upgrading. There are still plenty of ports, however, and an SFF machine is a perfect temporary solution for dorm rooms and LAN-party gamers. Some are even equipped with handles for easy transport.

If space isn't a concern, a mid- or full-tower case ensures that you'll have the room to upgrade your computer for years to come. If space is really limited, the all-in-one case is a desktop built into a flat-panel monitor. It's just as limiting when it comes to expansion slots, but it takes up little to no space (and is pretty cool-looking).

Your options for processors can be confusing, with competition between AMD, Intel and Apple making it hard to tell which processor is best. Pay attention to the speed of the processor, measured in gigahertz (GHz), to know what you're paying for. Even budget-priced desktops should run near 1.4 GHz, and over 2 GHz if you have more money to spend.

If your budget is tight, scaling back on the processor might be the best way to lower the cost. The processor is the brain of your computer, and dictates how fast your applications will run, but most programs run just fine without the high clock speeds at the top of the market now.

The PC's main memory, where its operating system and programs are run, is called RAM (random access memory). RAM is relatively inexpensive, and operating systems and applications are becoming ever more memory-hungry. The minimum amount of RAM you should get is 256MB, but many PCs offer 512MB or even 1GB and over. More RAM is always better, as your applications will generally run faster with more RAM available, and Windows XP runs best on at least 512MB.

New computers often feature graphics cards that are "integrated," meaning they draw on the same memory as the rest of your applications to generate graphics. For most users, this is fine.

For those who need a desktop for graphically intensive business programs, video editing, or the newest video games, a discrete video card is better. These cards have their own pool of memory to draw from. The lion's share of upscale video cards are manufactured by ATI or NVIDIA, so look for one of those names if a graphics card is built into your new computer, and aim for the highest amount of discrete RAM that you can afford.

Traditional floppy drives are often optional features on new computers. Any manner of ROM drive is available for your desktop. Combination drives are included with many new models, able to read from CD and DVD, burn media to CD, or, if dealing with large amounts of files, burn to DVD and Double Layer DVD to exchange and transport large files and multimedia files. Consider how many media files you'll be using, and how large, and decide whether your computer will be doubling as a DVD player on occasion before making your choice.

Many desktops come with ports for both a dial-up modem and an Ethernet connection already built into the back of the computer, so your computer is ready to connect anywhere. Ethernet cards are required for broadband Internet connections or for connecting to networks (such as on a college campus), so know beforehand how you'll be connected.

If you have more than one computer at home, adding wireless cards to each is a handy solution for home networking. Also on the back of your desktop, USB ports will allow you to connect peripherals like digital cameras, portable music players and other devices to transfer files back and forth. Many desktops have FireWire ports, and while that technology isn't as ubiquitous as USB 2.0, you can transfer data back and forth even faster if your peripheral is FireWire-ready.

You should look for at least four USB ports, enough to accommodate permanently connected peripherals like printers and keyboards and still leave room for occasional ones like digital cameras and music devices. Some models have USB ports in the front as well, making connections even more convenient.

The average desktop warranty will last one year for parts and labor. Some companies provide on-site service, and will send a technician to your home if a problem can't be fixed over the phone or online. Others require dropping it off at a local service center or shipping it directly to the manufacturer. If that's the case, find out who pays for shipping.

Many companies offer extensions on the warranty, sometimes up to three additional years, for an added cost. The decision of adding onto the warranty rests with you. Deciding factors can include: whether you are comfortable making repairs yourself (or letting a friend or relative do so), as well as how much you spent on the system.

Verification ensures that the final product satisfies or matches the original design (low-level checking) — i.e., you built the product right. This is done through static testing.

Validation checks that the product design satisfies or fits the intended usage (high-level checking) — i.e., you built the right product. This is done through dynamic testing and other forms of review.

According to the Capability Maturity Model (CMMI-SW v1.1), "Validation - The process of evaluating software during or at the end of the development process to determine whether it satisfies specified requirements. [IEEE-STD-610] Verification - The process of evaluating software to determine whether the products of a given development phase satisfy the conditions imposed at the start of that phase. [IEEE-STD-610]."

In other words, verification is ensuring that the product has been built according to the requirements and design specifications, while validation ensures that the product actually meets the user's needs, and that the specifications were correct in the first place. Verification ensures that ‘you built it right’. Validation confirms that the product, as provided, will fulfill its intended use. Validation ensures that ‘you built the right thing’.

http://en.wikipedia.org/wiki/Verification_and_Validation_%28software%29

In Royce's original waterfall model, the following phases are followed in order:

To follow the waterfall model, one proceeds from one phase to the next in a purely sequential manner. For example, one first completes the requirements specification, which is then set in stone. When the requirements are fully completed, one proceeds to design. The software in question is designed and a blueprint is drawn for implementers (coders) to follow; this design should be a plan for implementing the requirements given. When the design is fully completed, an implementation of that design is made by coders. Towards the later stages of this implementation phase, disparate software components produced by different teams are integrated. After the implementation and integration phases are complete, the software product is tested and debugged; any faults introduced in earlier phases are removed here. Then the software product is installed, and later maintained to introduce new functionality and remove bugs.

Thus the waterfall model maintains that one should move to a phase only when its preceding phase is completed and perfected. Phases of development in the waterfall model are discrete, and there is no jumping back and forth or overlap between them.

However, there are various modified waterfall models (including Royce's final model) that may include slight or major variations upon this process.

Computer-aided software engineering (CASE) is the use of software tools to assist in the development and maintenance of software. Tools used to assist in this way are known as CASE Tools.

Some typical CASE tools are:

All aspects of the software development lifecycle can be supported by software tools, and so the use of tools from across the spectrum can, arguably, be described as CASE; from project management software through tools for business and functional analysis, system design, code storage, compilers, translation tools, test software, and so on.

However, it is the tools that are concerned with analysis and design, and with using design information to create parts (or all) of the software product, that are most frequently thought of as CASE tools. CASE applied, for instance, to a database software product, might normally involve:

Steve McConnell, in his book Rapid Development, details a number of ways users can inhibit requirements gathering:

This may lead to the situation where user requirements keep changing even when system or product development has been started.

Agile software development is a conceptual framework for software engineering that promotes development iterations throughout the life-cycle of the project.

There are many agile development methods; most minimize risk by developing software in short amounts of time. Software developed during one unit of time is referred to as an iteration, which may last from one to four weeks. Each iteration is an entire software project: including planning, requirements analysis, design, coding, testing, and documentation. An iteration may not add enough functionality to warrant releasing the product to market but the goal is to have an available release (without bugs) at the end of each iteration. At the end of each iteration, the team re-evaluates project priorities.

Agile methods emphasize face-to-face communication over written documents. Most agile teams are located in a single open office sometimes referred to as a bullpen. At a minimum, this includes programmers and their "customers" (customers define the product; they may be product managers, business analysts, or the clients). The office may include testers, interaction designers, technical writers, and managers.

Agile methods also emphasize working software as the primary measure of progress. Combined with the preference for face-to-face communication, agile methods produce very little written documentation relative to other methods. This has resulted in criticism of agile methods as being undisciplined.

The software crisis was a term used in the early days of software engineering, before it was a well-established subject. The term was used to describe the impact of rapid increases in computer power and the complexity of the problems which could be tackled. In essence, it refers to the difficulty of writing correct, understandable, and verifiable computer programs. The roots of the software crisis are complexity, expectations, and change.

Conflicting requirements have always hindered the software development process. For example, while users demand a large number of features, customers generally want to minimise the amount they must pay for the software and the time required for its development.

The term software crisis was coined by F. L. Bauer at the first NATO Software Engineering Conference in 1968 at Garmisch, Germany. An early use of the term is in Edsger Dijkstra's 1972 ACM Turing Award Lecture, "The Humble Programmer" (EWD340), published in the Communications of the ACM. Dijkstra states:

[The major cause of the software crisis is] that the machines have become several orders of magnitude more powerful! To put it quite bluntly: as long as there were no machines, programming was no problem at all; when we had a few weak computers, programming became a mild problem, and now we have gigantic computers, programming has become an equally gigantic problem.

– Edsger Dijkstra, The Humble Programmer

The causes of the software crisis were linked to the overall complexity of the software process and the relative immaturity of software engineering as a profession. The crisis manifested itself in several ways:

Various processes and methodologies have been developed over the last few decades to "tame" the software crisis, with varying degrees of success. However, it is widely agreed that there is no "silver bullet" ― that is, no single approach which will prevent project overruns and failures in all cases. In general, software projects which are large, complicated, poorly-specified, and involve unfamiliar aspects, are still particularly vulnerable to large, unanticipated problems.

http://en.wikipedia.org/wiki/Software_crisis